Want to get GenAI right? Start with how your people use it

Your colleagues are using GenAI right now—but probably not in the way your IT team intended. From data leaks to app overload, organizations are learning that enabling GenAI isn’t just about buying a license—it’s about rethinking policy, trust, and productivity. Here’s what we’ve learned.

Understanding GenAI Usage in Organizations: A Framework

Before we dive into the statistics, it's helpful to establish a framework. At NROC Security, we categorize GenAI usage in organizations into three key buckets:

- Personal Productivity

This refers to individual employees using GenAI tools to improve their personal work efficiency: for example, drafting emails, summarizing documents, or generating ideas (and improving the readability of this blog post).

- Business Process Productivity

Here, AI is embedded into workflows, often via SaaS platforms, to improve operational efficiency. Think of AI-powered automation in customer support or a CRM assistant.

- Strategic AI

Strategic AI involves organization-wide initiatives where AI is used to gain competitive advantage. This could include proprietary model development, custom agent deployment, or integrating AI deeply into core products and services. This is typically where companies allocate their AI resources.

The Rise of Personal Productivity Use

Of these categories, we’re seeing the biggest momentum right now in personal productivity. Many enterprises are still navigating the early stages of enabling access to GenAI applications for their employees.

As recently as late 2024, the prevailing assumption in many companies was that a single, standardized AI tool would meet everyone’s needs. Organizations often chose one GenAI app, either hosted internally or via an enterprise license, and expected (and hoped) that employees would work within those constraints.

But that thinking is shifting. Narrowly focused apps are emerging with greater accuracy and fewer compliance issues, making them more suitable for specific use cases. Meanwhile, business users continue to invent new GenAI use cases daily—use cases they can’t yet fully define, let alone standardize across a large workforce.

The Data Privacy Barrier

Adoption, however, is not without friction. One major roadblock is still concern about data leakage. And rightly so: our analysis at NROC Security shows that 37 out of every 100 prompts include personally identifiable information (PII).

Most corporate Acceptable Use Policies (AUPs) prohibit entering PII into public LLMs and require users to stick to vetted apps. Unfortunately, this means someone has to approve each app manually, an administrative burden that slows down innovation. And because users won’t accept that slowdown, they work around the rules and guidance.

As a result, 69% of GenAI chatbot usage still happens via free or personal accounts—not enterprise ones. This is despite the fact that corporate versions often come with privacy assurances like “no prompt data is used for training.”

One interpretation? Users are choosing the right tool for the job, regardless of corporate policy. If a purpose-built AI app provides superior results, it’s hard to argue with the logic of using it—even unofficially.

A Long Road to Skill and Scale

Despite the buzz, only 5% of business users use GenAI frequently, and even fewer have developed advanced skills (these users are often referred to as "superprompters"). These power users quietly gain value and get work done quickly, but they rarely broadcast how they do it, which makes them difficult to identify and their methods difficult to replicate across the organization.

So while companies are rushing to embed AI into their products and processes, the vast majority of everyday business users are still figuring out how to engage meaningfully with GenAI. Skills are undeveloped, processes are immature, and scale is elusive.

How NROC Security Can Help

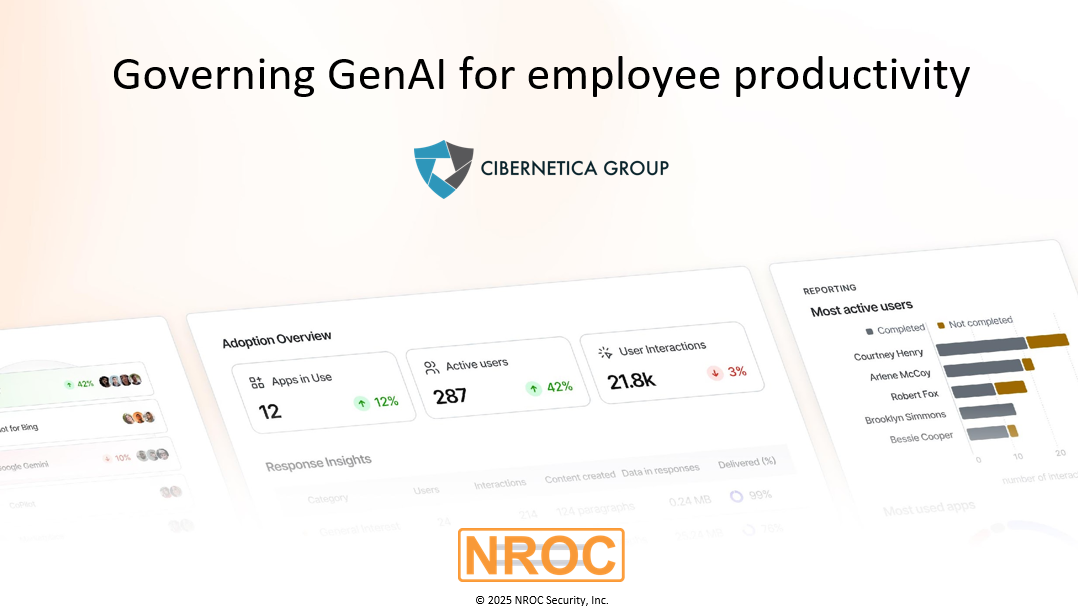

NROC Security provides visibility, guardrails, and compliance with a unique advantage: no endpoint plugins or agents are required. That means we can deliver full organizational visibility and insights in just 4 days, not 4 months or more.

Want to know more? Visit our solution page to see how we’re helping enterprises enable GenAI safely and efficiently. If you’re wondering how to enforce a GenAI acceptable use policy, you can download our enforcement standard template.