Google indexes shared ChatGPT conversations

Shared ChatGPT chats now appear in Google search results.

Have you been sharing your ChatGPT conversations with colleagues or friends? You might want to think twice. Google has started indexing these shared chats, and what you've discussed could be more public than you realize.

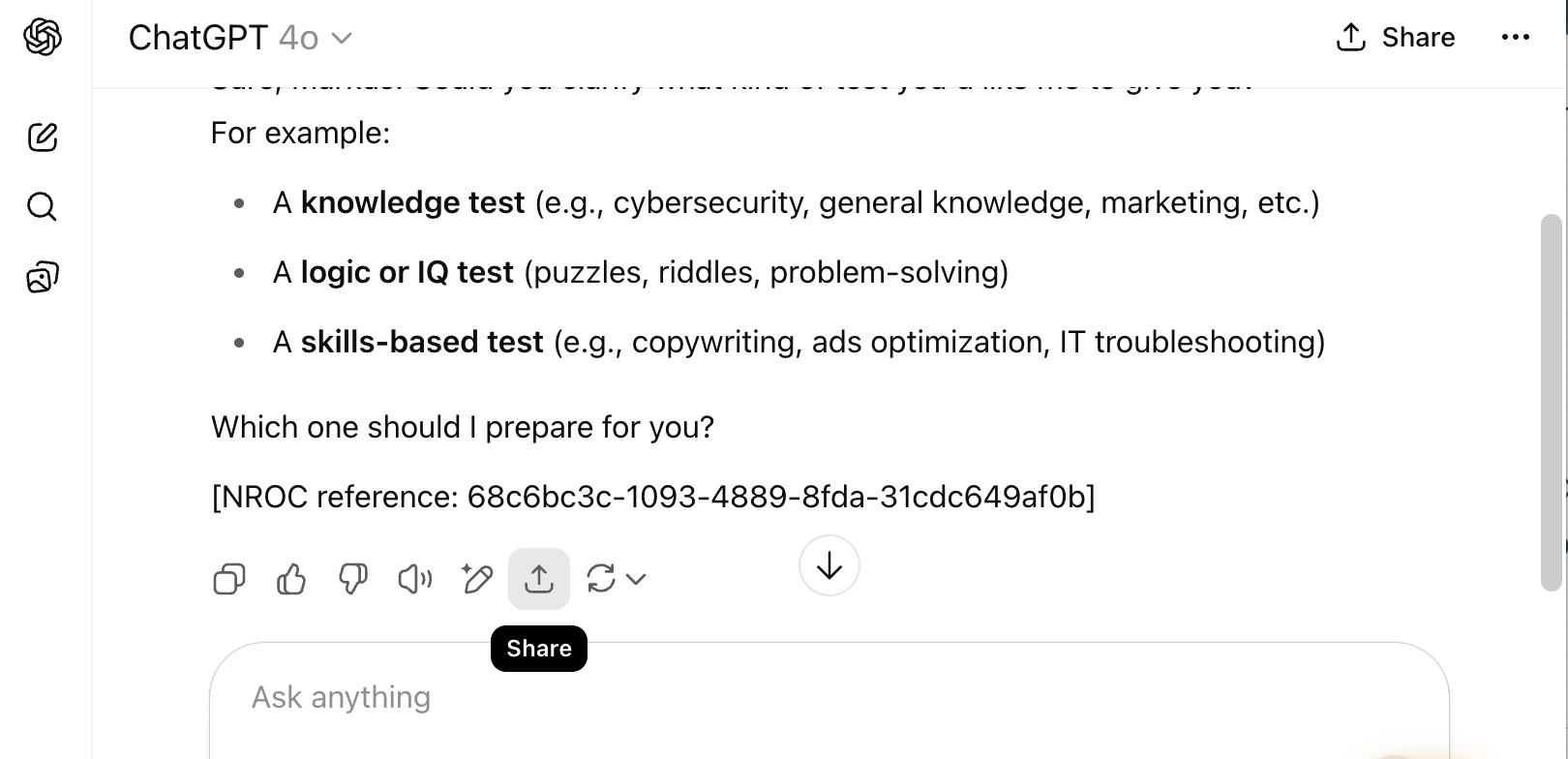

If you use ChatGPT, you're probably familiar with the handy "share" button that lets you easily pass along your conversations. It's great for collaboration, right? But here's the catch: there's no clear warning that these shared chats could be indexed by Google if you are on a plan that allows data training, such as logged-out use or the Free and Plus plans. As we've highlighted in our previous posts on GenAI app privacy settings, your prompt data can be used for training. Now it appears that this training data, in the form of your shared conversations, is also available in Google search results.

While your personal identity isn't directly revealed, the context of the conversation could easily hint at who you are or the company you work for. Imagine sensitive corporate data, discussed within a shared chat, suddenly becoming searchable by anyone.

Consider this scenario: A company decides to use ChatGPT, but maybe not everyone gets a licensed account, or someone accidentally uses their personal login. An organizational habit of sharing conversations via chat links quickly develops. And then, boom — it's on Google.

The simplest and most effective solution? Never put company-sensitive information into your prompts. That way, even if a chat is inadvertently shared, you won't have to worry about a data breach.
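To make that advice a bit more concrete, here is a minimal sketch of a check you could run on a prompt before it leaves your machine. It is an illustration only: the pattern names, the regular expressions, and the `find_sensitive` and `redact` helpers are all hypothetical, and a few regexes will not catch most real-world sensitive data.

```python
from __future__ import annotations

import re

# Hypothetical example patterns; tailor these to your own organization's data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\b(?:sk|pk)-[A-Za-z0-9]{16,}\b"),
    "internal_marker": re.compile(r"\b(?:confidential|internal only)\b", re.IGNORECASE),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the names of all patterns that match somewhere in the prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


def redact(prompt: str) -> str:
    """Replace every match with a placeholder that names the pattern."""
    for name, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[REDACTED {name}]", prompt)
    return prompt


if __name__ == "__main__":
    draft = "Summarize this for jane.doe@example.com"
    hits = find_sensitive(draft)
    if hits:
        print("Think twice, this prompt contains:", ", ".join(hits))
        print("Redacted version:", redact(draft))
```

A check like this only reduces accidental leaks. It does not replace the habit of keeping sensitive topics out of prompts in the first place.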

---

Edit: Late yesterday, ChatGPT announced on X that it is rolling back this functionality.

***

NROC Security helps organizations get visibility and set guardrails (redact, ask "are you sure", or block) for GenAI apps such as ChatGPT, copilots, and the like. We enable organizations to realize the productivity benefits of those apps while mitigating risks and compliance issues. We inspect both prompts and responses, which is unique, and deliver from the cloud with no agents or browser plugins. We are expanding from personal productivity AI to business process AI by governing the flow of proprietary data -- 'the right docs to the right AI'.