CISO Guide to Securing Employee Use of GenAI

Best Practices for Securing Public GenAI Apps and LLM Apps in the Enterprise

Public GenAI apps, such as ChatGPT and Copilot, are now widely used at work, and that use is not without risk. Providers have created a maze of data protection promises, in which the wrong AI, subscription, login, setting, or geography can mean that the end user's prompt becomes the AI maker's to use. At the same time, NROC Security has observed 32 instances of PII in every 100 prompts.

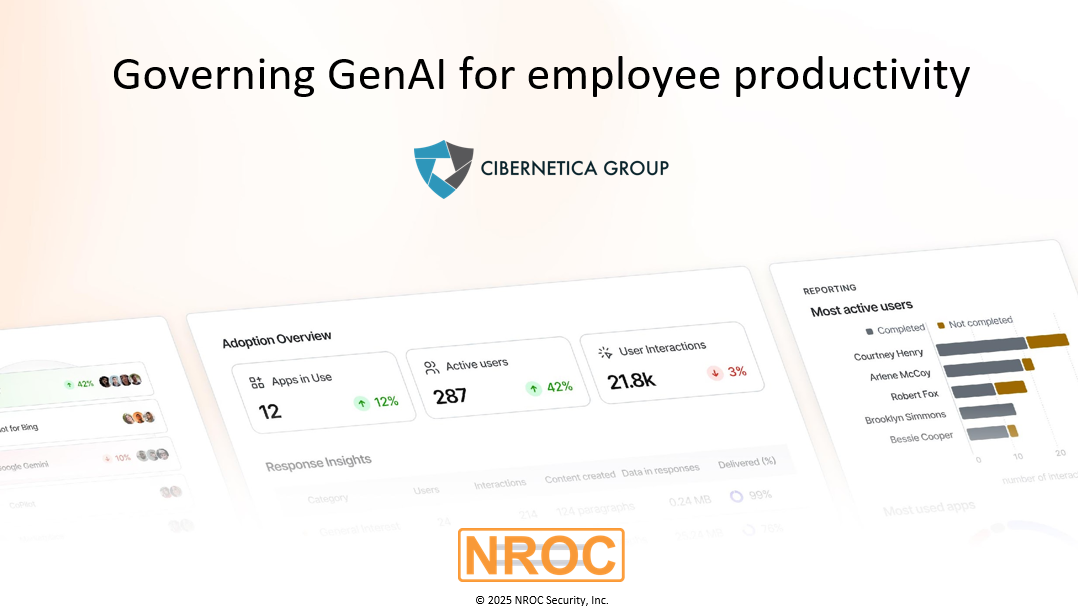

Fundamentally, GenAI apps represent a new breed of software where use cases are invented on the fly, user inputs are unpredictable, any data can be used, and there is no promise that the outputs are accurate. This creates several issues for traditional security architectures: a lack of visibility, an inability to control user-driven data exposure, an inability to monitor potentially inaccurate outputs, and gaps in identity and access management. End users need guidance, not friction. The security team needs effective controls, and the compliance team needs evidence of policy compliance.

This guide, based on over 130 practitioner interviews, defines the issues and suggests best practices for how CISO teams can go beyond the traditional playbook. It concludes by showing how well-executed AI security can build trust in AI usage and provide insights for shaping the AI agenda. Security can be an accelerator for organizational learning and innovation.