How AI Champions drive personal productivity and ROI from GenAI

Practical ways to build end-user confidence, skills, security, and governance so employees can realize productivity gains from GenAI tools like ChatGPT.

The enthusiasm is already there.

Your CEO is openly pro-AI and talks about it as a strategic advantage. Your CFO has baked AI productivity gains into the budget. Your HR leadership brings up AI every time you ask for additional headcount.

But data from our customer base paints a very different picture. Only 15–30% of desktop workers use GenAI apps weekly, and very few use them frequently. The general skill level is very low. And with personal productivity AI (ChatGPT, Gemini, Copilot and the like), it is up to every ‘knowledge worker’ to devise his or her own use cases. In the same organizations, we see IT initiatives to automate business processes with AI, but we very seldom see a structured change management effort to drive everyday productivity ROI from this widely available capability. The much-discussed “AI capability overhang” is real.

What entered the organization as a gadget now needs to deliver productivity ROI, every day.

This is where AI champions, security leaders and compliance professionals need to come together.

From our experience as a cybersecurity innovator in the GenAI security space, we’ve learned that personal productivity gains from tools like ChatGPT don’t just appear. They require confidence, skills, security, and governance. Below are the practical steps to make it happen.

1. Inspire confidence before you demand results

The biggest barrier to GenAI adoption is rarely technology. It’s hesitation.

Employees worry about:

- Using AI “the wrong way”

- Leaking sensitive data

- Looking unskilled or replaceable

- Breaking unspoken rules, or rules buried in hard-to-comprehend policy documents

AI champions must address confidence first.

That means clearly articulating:

- What is allowed (and encouraged)

- What is prohibited (and why)

- Where responsibility sits when AI is used in daily work

In cybersecurity, ambiguity creates risk. The same is true for AI adoption. Purpose-built GenAI security tooling can greatly build this confidence: it signals that usage is encouraged, guides employees when they run into guardrails, and provides a safety net against leaking data by mistake.

2. Build skills that map to real work

Most employees don’t need to become prompt engineers. They need to become better versions of their current roles.

Effective AI skill building focuses on:

- Recognizing which daily tasks suit AI, and when not to use it

- Prompting skills for research and summarization tasks, where the real time savings are

- Spotting hallucinations, and remembering that the user remains personally responsible for the output

In practice, this means:

- Role-specific examples (sales, engineering, finance, HR), delivered in short, practical enablement sessions

- Shared internal prompt libraries tied to real use cases

Clear usage statistics per department, together with a sample of real use cases, help the AI team focus training on the right topics and at the right level.
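As a minimal sketch of what “usage per department” could mean in practice, the snippet below computes the weekly active share: the fraction of each department’s headcount that used a GenAI app at least once in a given week. It assumes a hypothetical log of usage events (user, department, ISO week label) and a hypothetical headcount table; the names `UsageEvent` and `weekly_active_share` are illustrative, not part of any product API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    """One GenAI app interaction (hypothetical log format)."""
    user_id: str
    department: str
    week: str  # assumed ISO week label, e.g. "2026-W05"


def weekly_active_share(events, headcount, week):
    """Fraction of each department's headcount active in the given week.

    A person counts once no matter how many prompts they sent, and
    departments with no usage at all still appear with a share of 0.0.
    """
    active = defaultdict(set)
    for e in events:
        if e.week == week:
            active[e.department].add(e.user_id)
    return {dept: len(active[dept]) / n for dept, n in headcount.items() if n > 0}


events = [
    UsageEvent("u1", "sales", "2026-W05"),
    UsageEvent("u1", "sales", "2026-W05"),    # repeat use by the same person counts once
    UsageEvent("u2", "sales", "2026-W05"),
    UsageEvent("u3", "finance", "2026-W05"),
    UsageEvent("u4", "finance", "2026-W04"),  # different week, ignored
]
headcount = {"sales": 4, "finance": 5, "hr": 3}
shares = weekly_active_share(events, headcount, "2026-W05")
print(shares)  # {'sales': 0.5, 'finance': 0.2, 'hr': 0.0}
```

Counting distinct people rather than prompts keeps one enthusiastic user from masking a department where nobody else has started.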

3. Spread best practices horizontally, not top-down

Our data shows vast differences in usage between the ‘superprompters’ and everybody else. You should know who your most proficient users are and how they are using the tech. They have figured something out. Giving them credit and promoting their ideas internally is the most effective way to broaden the usage.

The AI team should act as a connector, sharing ideas, workflows, and prompts across the organization. This turns isolated wins into institutional knowledge.

Insights from the best use cases benefit not only the colleagues of the ‘superprompters’; they are also source material for evolving the AI strategy. You get candidates for the next business process to automate and the next experience to improve with AI.

4. Measure what matters for continuous improvement

There is no single measure or dashboard that quantifies the personal productivity ROI.

It is rather elusive. Did more get done? Was the ‘more’ of any value? Did we realize the productivity gain that the CFO already took into account?

The only way is to go business function by business function and tie the usage of AI to changes in that function’s KPIs. Splitting populations into heavy and light AI users is another practical method. Just avoid the classic correlation vs. causality trap: a good salesperson was already good before AI, and that skill is what led him or her to adopt AI early on.
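One way to soften the correlation vs. causality trap when splitting heavy and light users is to compare each group’s *change* in a KPI from before to after AI rollout, rather than the KPI levels themselves. This is the standard difference-in-differences idea, swapped in here as an illustration rather than as the authors’ method. The sketch assumes you have a before and after KPI value per person (for example, hypothetical deals closed per quarter); the numbers below are made up. Even this estimate is not proof of causality: early adopters may also have been on a steeper trajectory anyway.

```python
from statistics import mean


def naive_gap(records):
    """Difference in post-rollout KPI levels, heavy minus light users.

    records: list of (group, kpi_before, kpi_after), group in {"heavy", "light"}.
    This mixes AI impact with pre-existing skill differences.
    """
    heavy = [after for g, _, after in records if g == "heavy"]
    light = [after for g, _, after in records if g == "light"]
    return mean(heavy) - mean(light)


def did_estimate(records):
    """Difference-in-differences: heavy users' mean KPI change minus light users'."""

    def avg_change(group):
        changes = [after - before for g, before, after in records if g == group]
        if not changes:
            raise ValueError(f"no records for group {group!r}")
        return mean(changes)

    return avg_change("heavy") - avg_change("light")


# Illustrative numbers only: (group, deals_before, deals_after) per salesperson.
records = [
    ("heavy", 10, 13),
    ("heavy", 12, 14),
    ("light", 6, 7),
    ("light", 8, 8),
]
print(naive_gap(records))     # 6.0: mostly the gap that existed before AI
print(did_estimate(records))  # 2.0: extra improvement among heavy users
```

The naive comparison credits AI with the whole 6-deal gap, most of which was already there before rollout; comparing changes isolates the 2-deal extra improvement that is at least a plausible candidate for an AI effect.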

Tying measurement to the function’s KPIs keeps it grounded in the business. For example, if there are unit cost, cycle time or quality metrics and they did not move, the ROI was not there. However, even anecdotal evidence of productivity gains is golden, because it means there is hope. The gap is then either a usage issue or a skills issue, and both are solvable problems.

This area requires a surprising amount of the AI team’s resources, but it is a necessary investment. Measurement is not about surveillance. It is about learning. Without that quantitative work, the organization does not know what is possible, the CFO’s budget spreadsheet is detached from reality, and misplaced workforce cuts erode the performance of the organization.

5. Govern GenAI as a structured business initiative

Most organizations have initiated AI governance by now, and in general the composition of those teams is the right one: business, IT, security, compliance and HR. But most AI boards do not think of themselves enough as change management projects, and many lack a structured effort to drive everyday productivity ROI from personal productivity AI. Publishing an acceptable use policy and making prompt training available does not yet count as change management.

AI strategy guides governance. Perhaps personal productivity AI is already underrepresented in the strategy, which would explain the lack of change management drive. We all remember past change management efforts, such as adopting solution selling or becoming customer-driven. They succeeded when they were organized as business initiatives and the team showed incredible persistence. Otherwise, they withered away with minimal impact.

Metrics on usage, use cases, skill levels and training levels, and ultimately the impact on business process KPIs, are what the AI team should discuss. If that happens, the team is onto something that drives real business value.

Join us on March 4th, 2026, as we show you how productivity-first governance for GenAI can:

- Turn AI policies into measurable productivity gains

- Balance compliance, security, and employee empowerment

- Address new AI legislation that requires proof of policy compliance

Register now for the webinar here.

The Role of the AI Champion

The AI Champion is the ultimate business owner of the results from AI. He or she sits at the intersection of strategy and execution, chairs the AI governance bodies, and is the visible face to the organization to drive the change.

What started as a gadget needs to drive personal productivity across the organization and produce measurable business outcomes. When done well, the effort invites staff to innovate their own tasks, which completely transforms the AI vs. human conversation. Employees appreciate the progress and feel they work for a modern company that adopts AI responsibly.

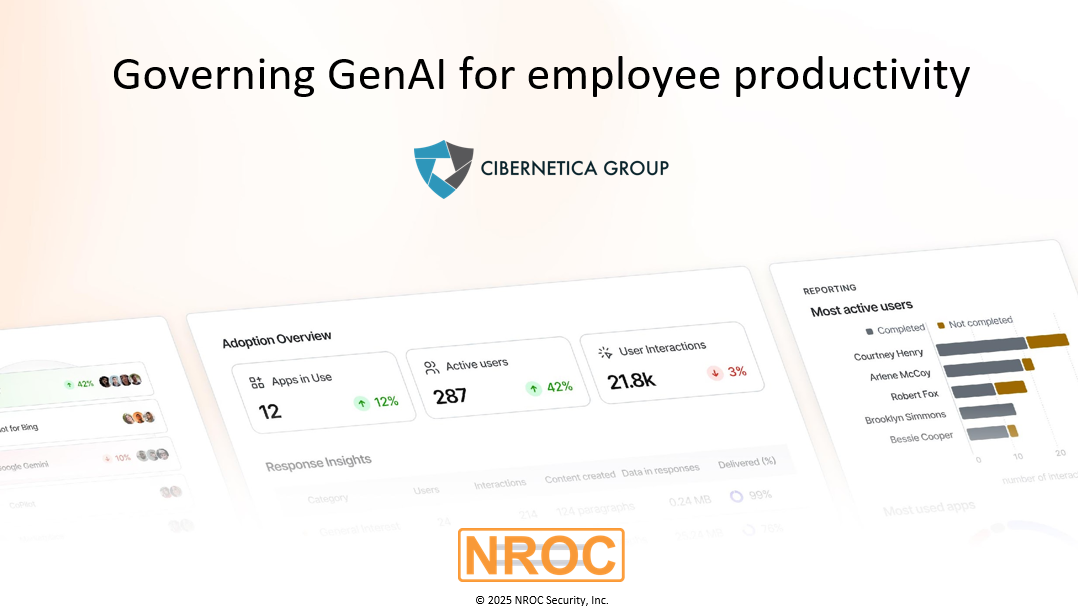

At NROC Security, we work with organizations to bring visibility, control and evidence to employee GenAI usage – while enabling innovation and productivity. If you are thinking about how to safely drive personal productivity GenAI at scale in 2026, now is the time to start a conversation.
