AI Breaking Bad?

Misusing GenAI apps for ‘Bad’ purposes

The hit series Breaking Bad is about a chemistry teacher who, diagnosed with terminal lung cancer, goes into the methamphetamine business to secure his family’s future. Something similar is happening in the AI world. AI today is seen as a way to help with and speed up human work, freeing people’s brainpower to focus on managing projects, keeping the big picture in view, and planning ahead to feed new instructions to the AI. That is a noble goal, much like the end part of the series premise: “…securing family’s future”.

What about the middle part, the dark side, then? There is already an ongoing discussion about various problems with AI apps. The first is how the apps can be misused for ‘Bad’ purposes. There is talk about the OWASP Top 10 for LLM Applications, and also about jailbreak techniques such as ‘Skeleton Key’, among others. The aim of such techniques is to get the AI to explain the darker side of humanity, for example to hand over a recipe for a Molotov cocktail.

In protecting a corporate setup, the first concern is that the above-mentioned techniques for ‘AI Breaking Bad’ enable malicious actors or competitors to use prompt engineering to search for unpublished information about an organization. Alternatively, the wider public may be misinformed about a company’s position, for example its financials or its customer reputation. All of this is, of course, possible only if the LLMs have been trained on the organization’s proprietary information without the right guardrails in place.

The second issue is AI ethics. A large enterprise has many people, and one can assume that its employees represent humanity at large fairly accurately: there are people with health issues, people with personality issues, and people with varying levels of motivation, just as in the whole population of our planet. This is normal, but ethics becomes a problem when an employee starts using company assets, the AI apps, to advance their own political agenda or similar aims. In a corporation, that is unwanted behaviour (and most probably against company values and the acceptable use policy).

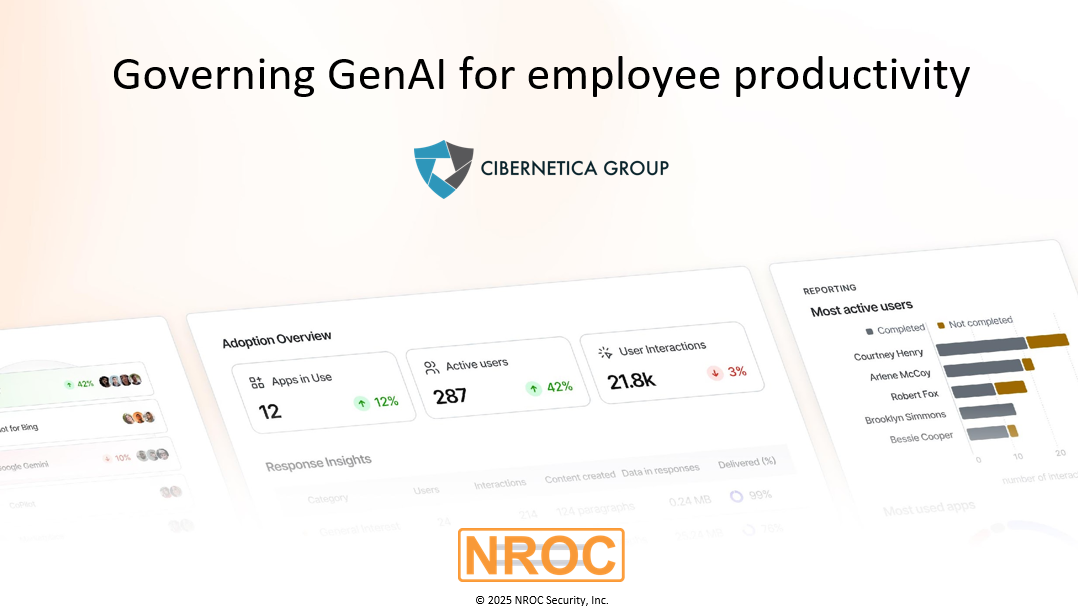

Companies should therefore ensure that employees’ use of the new, productivity-boosting tooling is managed in a way that lets the company know what was done with AI, by whom, and when. This makes it easier to open up and accelerate adoption and to bring the gains to the organization, making stakeholders happy. In short, knowing the usage and having guardrails in place is vital to unleashing the creativity of business users.
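To make the "what, by whom and when" idea concrete, here is a minimal sketch of a usage record in Python. It is an illustration only, not a description of any particular product: the `AIUsageRecord` schema, the `record_usage` and `usage_by_user` helpers, and the in-memory list used as a log are all hypothetical names chosen for this example.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIUsageRecord:
    """One audit entry (hypothetical schema, assumed for illustration)."""
    user: str       # by whom
    app: str        # which AI app was used
    timestamp: str  # when, as an ISO 8601 string in UTC
    prompt: str     # what was done


def record_usage(log: list, user: str, app: str, prompt: str) -> AIUsageRecord:
    """Append a timestamped entry to the log and return it."""
    entry = AIUsageRecord(
        user=user,
        app=app,
        timestamp=datetime.now(timezone.utc).isoformat(),
        prompt=prompt,
    )
    log.append(entry)
    return entry


def usage_by_user(log: list, user: str) -> list:
    """Return every entry recorded for the given user."""
    return [entry for entry in log if entry.user == user]


def to_json_lines(log: list) -> str:
    """Serialize the log as one JSON object per line, e.g. for export."""
    return "\n".join(json.dumps(asdict(entry)) for entry in log)
```

A real deployment would write to durable, access-controlled storage and would likely need to consider how much of each prompt may be retained; the in-memory list here only shows the shape of the data.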

NROC was founded to innovate governance and data protection for the business use of AI. We are always keen to share our learnings and innovate with practitioners. For more information, please visit www.nrocsecurity.com.