Prevent data leaks, govern shadow AI, prove compliance, and save 50% on GenAI subscriptions

On-demand webinar:

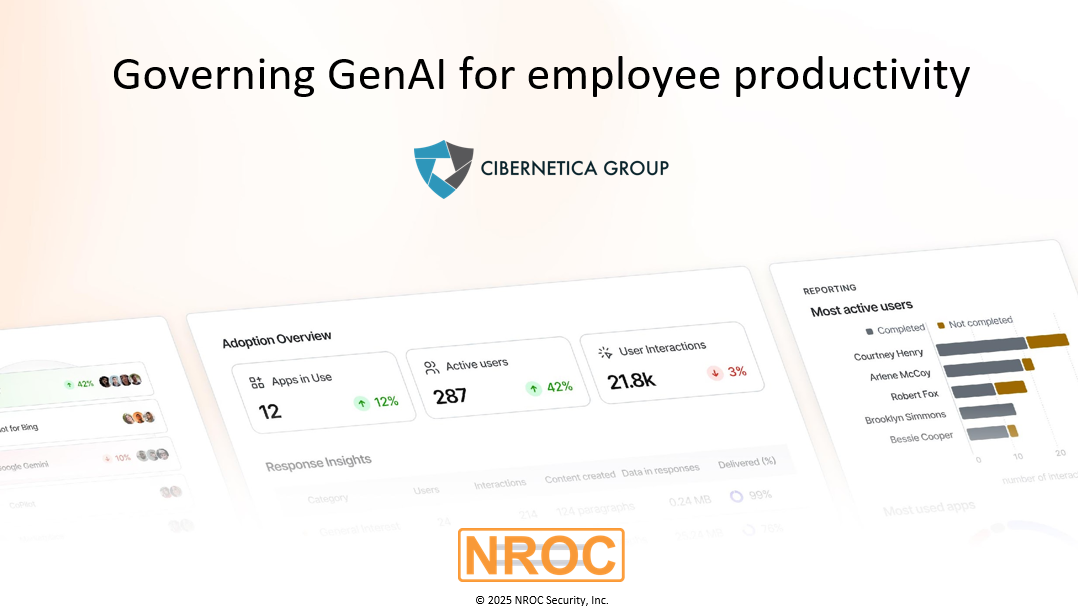

Governing GenAI for employee productivity with Cibernetica and NROC Security

By looking at real usage, data flows, and risk exposures

By automatically gating access based on data protection terms and risk profiles

By enforcing policies on data usage and generated content based on job role needs

By adding a safety net that catches accidental data leaks

See how The Hornblower Group drives responsible GenAI use while unlocking employee productivity